How do our brains process speech?

Summary

Without question, the human ability to produce speech in such a short amount of time is simply extraordinary. TED-Ed’s video “How do our brains process speech?” helps us understand more about the complexity of speech comprehension. The typical 20-year-old has a vocabulary of between 27,000 and 52,000 words, and most of these words last less than a second when spoken aloud. Because our brain picks the right word so quickly, and correctly about 98% of the time, it’s crucial to understand how it works.

The video’s main point is that, like GPUs, our brains are adept at parallel processing. Let’s begin by supposing that the brain contains a processing unit for each word, whose job is to assess the likelihood that incoming speech matches that word. Each word processing unit corresponds to a pattern of firing across a group of neurons in the cerebral cortex. We begin to make assumptions about which word we are hearing as soon as we hear its beginning. If a word starts with “pro”, for instance, it might be “professor”, “professional”, or “probability”. After hearing more of the word, we can usually identify it. In addition, while the brain works out the meaning of the word, the active unit suppresses the other units for a few milliseconds. Context then helps us pin down the precise meaning of a sentence.

It’s also crucial to understand how we pick up new words. The hippocampus, which is a long way from the primary language processing system, stores new words first. After many nights of sleep, the new words are linked with the old ones in the language processing network.
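The word-matching idea in the summary can be made concrete with a small sketch. This is only a toy illustration, not how the video or the brain implements it: the vocabulary is hypothetical, letters stand in for speech sounds, and the names `active_units` and `recognition_point` are made up for this example. Each word acts as its own unit that checks whether it still matches what has been heard so far, and the word is identified once only one unit remains active.

```python
# Hypothetical toy vocabulary; a real speaker knows tens of thousands of words.
VOCABULARY = ["professor", "professional", "probability", "problem", "practice"]


def active_units(heard, vocabulary=VOCABULARY):
    """Return the words whose units still match the input heard so far.

    Assumption: letters stand in for speech sounds. Each word is checked
    independently of the others, so in principle every check could run
    in parallel.
    """
    return [word for word in vocabulary if word.startswith(heard)]


def recognition_point(word, vocabulary=VOCABULARY):
    """Return the shortest beginning of `word` that leaves it as the only
    active unit, or None if the word is never singled out."""
    for end in range(1, len(word) + 1):
        heard = word[:end]
        if active_units(heard, vocabulary) == [word]:
            return heard
    return None


if __name__ == "__main__":
    # Several units stay active after hearing only "pro".
    print(active_units("pro"))
    # Hearing more of the word narrows the candidates down to one.
    print(recognition_point("probability"))
```

With this vocabulary, “pro” leaves four candidates, and “probability” is singled out before the word ends. A fuller model would score partial matches and use context, which this sketch leaves out.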

Reflection

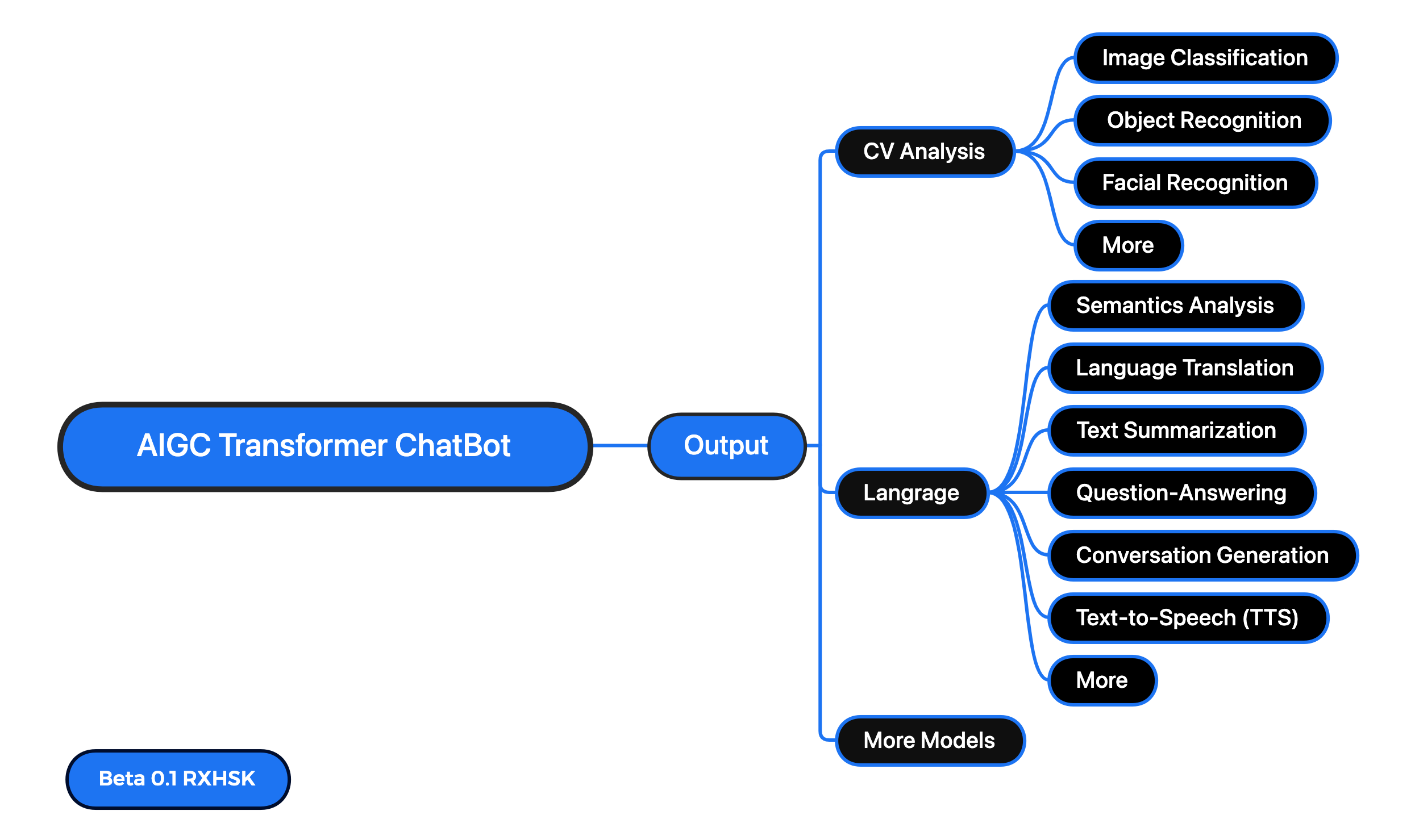

Without a doubt, NLP (Natural Language Processing) applications are becoming more and more important in everyone’s daily lives. Chatbots like New Bing, Notion AI, and ChatGPT from OpenAI are changing the way we think about what AI is capable of. People use them to write essays and code, create stories, plan journeys, learn new things, get online tips, and even control robots. The concept of NLP is also largely similar to how humans use and analyze language. One of my future goals is to build artificial general intelligence (AGI), so learning this material is both necessary and interesting.

I learned a lot of useful information from the video, including some optimizations that could be applied to the NLP chatbot systems we have now. For example, to make the AI’s response faster, we can let the model predict users’ words while they are still speaking. Over time, the model can predict words faster and users get a better experience. There is also a connection with what we learned in class. For example, new words are first stored in the hippocampus, and we learned in class that the hippocampus is a part of the brain mainly involved in long-term memory.

Before I watched this video, I had no idea how a human can produce speech in such a short time. I have now also realized that, in the trade-off between accuracy and speed, being as fast as possible with low power consumption is sometimes the better answer for our brain. Finally, I still have one question: once we understand all of this, how do we actually optimize LLMs (large language models) so that the whole system improves and ultimately helps humans build better knowledge?